AI Realities, Myths, and Misconceptions: Comparison to Human Intelligence

Written by Bruce R. Copeland on March 31, 2023

Tags: artificial intelligence, information, learning, neural network, human brain

The recent release of several powerful artificial intelligence technologies has unleashed all kinds of responses among humans: shock at the sophistication of what these technologies can do, shock at their occasional stupidity, and in some quarters terror at the potential implications for human civilization. Before we can really understand this kind of artificial intelligence, we need to understand human intelligence.

This article is the third in a series of three articles about artificial intelligence (AI), how it works, and how it compares to human intelligence. The first article surveyed the different available types of AI, whereas the second article examined computational neural networks in some detail.

What Does the Human Brain Do (and how does it do it)?

As humans, we like to believe our intelligence is conscious and rational. The reality is FAR different. Everything in animal decision making is based ultimately on some form of neuronal network. A single neuron or set of neurons converts sensory inputs into some kind of output decision or action. The overwhelming majority of this processing is done unconsciously. Human unconscious learning is predominantly emotional. The rate at which we learn something, and the extent to which we learn it, are dictated by neurotransmitters (glutamate, GABA, dopamine, etc.) whose levels/activity are heavily influenced by emotion. Thus we learn some things very rapidly and others much more slowly.

Humans and likely many other animals also have a certain degree of self-awareness and consciousness. This is an important alternate system for decision making that operates substantially parallel to our unconscious — but only when we make use of it.

Using our conscious awareness we can carry out templated procedures (step A, then step B, then...). Some of us have the capability to perform complex logical analysis and higher mathematical reasoning, apparently as advanced templated procedures. But very often those of us with these capabilities find ourselves

AI Realities, Myths, and Misconceptions: Computational Neural Networks

Written by Bruce R. Copeland on April 22, 2020

Tags: artificial intelligence, information, neural network, software development

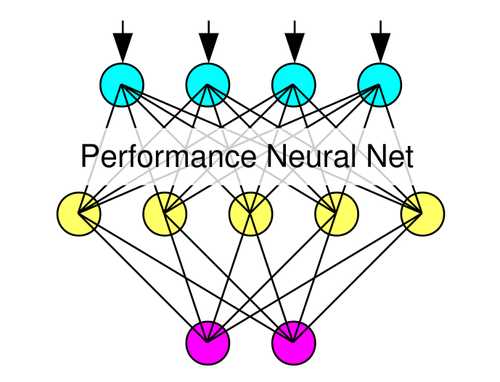

This is the second of three articles about artificial intelligence (AI), how it works, and how it compares to human intelligence. As mentioned in the first article, computational neural networks make up the overwhelming majority of current AI applications. Machine Learning is another term sometimes used for computational neural networks, although technically machine learning could also apply to other types of AI that encompass learning.

There is an extremely wide range of possible types of neural networks. Nodes can be connected/organized in many different arrangements. There are a variety of different possible node output functions: sigmoid, peaked, binary, linear, fuzzy, etc. Information flow can be feed-forward (inputs to outputs) or bidirectional. Learning can be supervised or unsupervised. Pattern sets for supervised learning can be static or dynamic. Patterns are usually independent of each other, but schemes do exist to combine sequences of related patterns for ‘delayed gratification learning’.
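To make the node output functions a little more concrete, here is a minimal Python sketch of four of them. The function names, the Gaussian form chosen for the 'peaked' function, and the default parameters are all illustrative assumptions, not definitions taken from any particular neural network library.

```python
import math


def sigmoid(x: float) -> float:
    """Smooth S-shaped output between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def peaked(x: float, center: float = 0.0, width: float = 1.0) -> float:
    """Gaussian bump (an assumed choice of 'peaked' function): largest at center."""
    return math.exp(-(((x - center) / width) ** 2))


def binary(x: float, threshold: float = 0.0) -> float:
    """Hard on/off output: 1 at or above the threshold, otherwise 0."""
    return 1.0 if x >= threshold else 0.0


def linear(x: float, slope: float = 1.0) -> float:
    """Output proportional to the summed input."""
    return slope * x


# Compare the four output functions on a few summed node inputs.
for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), peaked(x), binary(x), linear(x))
```

The only thing that changes from one node type to the next is the function applied to the summed input; the wiring between nodes can stay the same.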

The overwhelming majority of computational neural networks are feed-forward using supervised learning with static training patterns. Feed-forward neural networks are pattern recognition systems. Computational versions of such systems are the equivalent of mathematical functions because only a single output (or set of output values) is obtained for any particular set of inputs.
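The claim that a feed-forward network behaves like a mathematical function can be sketched in a few lines of Python. The network shape (two inputs, two hidden nodes, one output) and every weight and bias value below are hypothetical, chosen only for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum per node, then a sigmoid output."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hypothetical fixed weights; in practice these would come from training.
HIDDEN_W = [[0.5, -0.4], [0.3, 0.8]]
HIDDEN_B = [0.0, -0.1]
OUTPUT_W = [[1.2, -0.7]]
OUTPUT_B = [0.05]


def network(inputs):
    """Information flows one way only: inputs -> hidden layer -> output layer."""
    hidden = layer(inputs, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)


first = network([1.0, 0.0])
second = network([1.0, 0.0])
print(first, first == second)
```

Once the weights are fixed there is no hidden state and no feedback, so presenting the same inputs again always yields the same outputs.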

In supervised learning, some external executive capability is used to present training patterns to the neural network, adjust overall learning rates, and carry out multiple regression (or some good approximation) to train the neural network. Many training schemes utilize gradient descent (pseudo-linear) approximations for multiple regression, but efficient nonlinear regression techniques such as Levenberg-Marquardt regression are also possible. To the extent that regression is complete, systems trained by regression produce outputs that are statistically optimal for the inputs and training pattern set on which they were trained.

All regression training schemes have some type of overarching learning rate. In nonlinear multiple regression, the learning rate is adjusted by the regression algorithm, whereas in gradient descent regression the learning rate is set by the executive capability. One of the things that can go wrong in gradient descent approaches is to use too high a learning rate. Under such conditions, the learning overshoots the regression optimum, often producing unstable results. In engineering terms we call such a condition a driven (or over-driven) system.
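The overshoot problem is easy to see in a toy case. The sketch below runs plain gradient descent on a one-parameter squared error, (w - target)^2, as a stand-in for a real regression error surface. The function name, the target value of 3.0, and the two learning rates are illustrative assumptions.

```python
def gradient_descent(learning_rate, steps=25, start=0.0, target=3.0):
    """Minimize (w - target)**2 and return the value of w after every step."""
    w = start
    history = [w]
    for _ in range(steps):
        gradient = 2.0 * (w - target)  # derivative of the squared error
        w -= learning_rate * gradient  # step size is set by the learning rate
        history.append(w)
    return history


stable = gradient_descent(learning_rate=0.1)
overdriven = gradient_descent(learning_rate=1.1)

print("distance from optimum, rate 0.1:", abs(stable[-1] - 3.0))
print("distance from optimum, rate 1.1:", abs(overdriven[-1] - 3.0))
```

For this particular error function each step multiplies the remaining error by (1 - 2 × learning rate). With a rate of 0.1 the error shrinks by a factor of 0.8 per step. With a rate of 1.1 the factor is -1.2, so every step jumps past the optimum and lands farther away on the other side.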

Today automation techniques and Deep Learning are widely used in constructing and training neural networks. These approaches have made neural network technology much more accessible, but most of

Who Is Testing the Test?

Written by Bruce R. Copeland on April 18, 2020

Tags: covid-19, engineering, technology, testing

In these COVID-19 times, there is much talk about ‘testing’. As we get more and more pressure to reopen our economy, it is clear we have nowhere near the testing capacity or testing certainty that we really need in order to make rational decisions about who can work and where. How did we get to this point?

It may come as a surprise to hear that testing (whether software, biomedical, manufacturing…) is a complex subject involving both science and art. For starters there are many different kinds of tests even in a single domain of endeavor. (With COVID-19 for instance, there are tests for current COVID-19 infection, past exposure to COVID-19, relatedness of different COVID-19 samples, etc.) Each of these requires different (sometimes radically different) technology.

So how is a test designed? And how do we know whether, when, and why a test works (or not)? Designing a test follows basically the same methodology CTOs and Chief Architects use every day for any process:

- Identify the relevant technologies

- Set forth a plan for how to connect the technologies

- Check/verify that each technology works in the intended setting (POC)

- String together the different technologies

- Verify that the entire process/test works (POC)

- Verify that you have the supply chain or needed throughput for all the steps (have legal begin drafting the necessary contracts with appropriate exigencies/penalties)

- Do a complete error analysis for the full process/test

- Develop more specific ways to identify/troubleshoot potential or actual reliability problems (test the process/test)

Sometimes you are lucky and the process/test you want is

AI Realities, Myths, and Misconceptions: Overview

Written by Bruce R. Copeland on January 21, 2020

Tags: artificial intelligence, information, software development

In the last few years Artificial Intelligence (AI) has attracted much attention and some fair bit of controversy. Unfortunately there are significant myths and misconceptions about AI. This is the first of three articles about AI: what it is, how it works, and how it compares to human intelligence.

There are actually five different basic types of artificial intelligence:

- Rule Systems are sets of logical rules that apply to specific domains of knowledge. Such systems have been widely used (in various degrees of sophistication) in software for decades. Rule systems correspond closely with human conscious logical reasoning and mathematical problem solving. The logic in rule systems is entirely deterministic, and the effectiveness of a rule system depends sensitively on the ability to identify actual facts and correctly discriminate facts from assumptions. Rule systems are occasionally used with lesser rigor in conjunction with some type(s) of heuristics. Expert systems are one example, although they are no longer widely used in computing.

- Neural Networks are systems of connected processing nodes (including input and output nodes) that mimic biological neural systems. The connections between nodes can be organized in many ways, but nodes are typically divided into layers, and most neural networks have feed-forward (input to output) information flow. A specific combination of input data and expected output data is called a pattern. Each node (or more commonly layer) has an associated learning rate, and neural network learning can be either supervised or unsupervised. There are actually many different types of neural networks, but only one main type (feed-forward, supervised deep learning) is in wide use today. Supervised training of a neural network is accomplished by presenting multiple patterns to the network, and the resulting training is usually some approximation of mathematical regression (linear or nonlinear). Stochastic variation can be introduced as part of training, but is not a requirement. Neural networks are similar to human neural (non-conscious) data/input processing; however human neural processing is NOT regression.

-

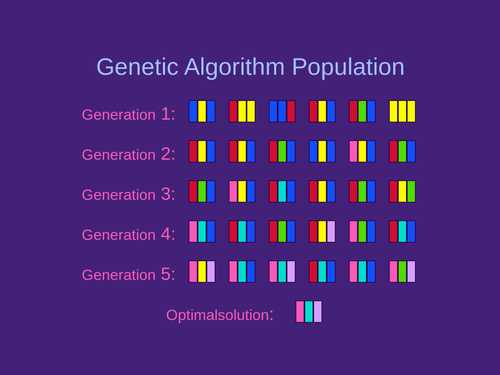

Genetic Algorithms are systems that optimize sets of possible attribute values for a system or problem by genetic reproduction/selection. A population of different possible combinations of attribute values is used. Optimization is accomplished by forming subsequent generations from the initial population according to whatever reproduction and selection models have been chosen. Optimal attribute values are usually obtained after numerous generations. The optimization is highly stochastic.

- Genetic Programming is analogous to a genetic algorithm, but the attributes/values are programming loops, decisions, order, etc. used for a computer algorithm. The resulting optimization is highly stochastic. Genetic programming can be very powerful but is not widely used. It is unclear whether biological organisms might use something similar to genetic programming when optimizing the logical workflow for some kind of conscious/unconscious intelligence.

- Fuzzy Logic is really just a sophisticated form of heuristics. The architecture for fuzzy logic is typically that of a neural network, although fuzzy systems sometimes make use of specialized rules engines when propagating inputs through the fuzzy network.
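The genetic algorithm entry in the list above can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the three-value target, the fitness function, the 'keep the fitter half' selection model, and the crossover-plus-mutation reproduction model. Real genetic algorithms use many different selection and reproduction schemes.

```python
import random

TARGET = [0.2, 0.8, 0.5]  # hypothetical optimum, used only to score candidates


def fitness(candidate):
    """Higher is better: negative squared distance from the target values."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def evolve(generations=60, population_size=30, mutation_scale=0.05, seed=1):
    rng = random.Random(seed)  # seeded so that a run can be repeated
    # Initial population: random combinations of attribute values.
    population = [[rng.random() for _ in TARGET] for _ in range(population_size)]
    for _ in range(generations):
        # Selection: keep the fitter half of the population as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: population_size // 2]
        # Reproduction: mix attribute values from two parents, then mutate.
        children = []
        while len(parents) + len(children) < population_size:
            mother, father = rng.sample(parents, 2)
            child = [
                rng.choice(pair) + rng.gauss(0.0, mutation_scale)
                for pair in zip(mother, father)
            ]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(best, fitness(best))
```

The seed fixes the random numbers so a run can be repeated, but the search itself is still stochastic: a different seed follows a different path through the possible attribute values.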

Of these basic types, only rule systems and neural networks are in common use. Today when people refer to AI, they almost invariably mean

Architecting an Updated CyberSym Blogs Site

Written by Bruce R. Copeland on June 20, 2019

Tags: aws, cms, cybersym blogs, gatsby, nodejs, react, s3, wordpress

Some of you will have noticed that the CyberSym Blogs have a new look/feel. The changes are actually far more than skin deep. For a long while I’ve been dissatisfied with Joomla as a blog and content management system. The extensive PHP codebase used for Joomla has proved increasingly clunky. There have been frequent security problems. The mechanism for updating versions on a site is cumbersome. And maddeningly, Joomla has often made choices designed to attract new users, but problematic for existing users.

WordPress and Drupal (Joomla’s main competitors) suffer from many of the same problems (cumbersome upgrade, bloated PHP codebases, and frequent security problems). Over the years I’ve tested a number of other content management systems based on Java, Python, or JavaScript, but all have proved to be seriously lacking.

Recently there has been a lot of interest in headless CMS. These headless systems emphasize backend content management and leave the frontend presentation of content to other developers. As part of this trend both WordPress and Drupal have developed APIs which allow those platforms to function like a headless CMS.

Also recently there has been a lot of renewed interest in static websites, which load and respond much more rapidly than most dynamic sites. Gatsby is a static site generator based on React. React is a highly popular JavaScript framework for site design, typically built with NodeJS tooling. In React, just about everything on a site is a component (a header, a top menu, a side widget, a page template, etc). Component files are JSX – a blend of HTML, JavaScript, and sometimes inline CSS. Gatsby looked like a promising way to migrate existing blogs in Joomla, WordPress, or Drupal to something much leaner. Gatsby has plugins that support the migration of WordPress or Drupal content into a Gatsby static site, but nothing for Joomla. Fortunately WordPress has a plugin that will import a Joomla site into WordPress (primarily a database migration). So the strategy was to import the Joomla blog content into WordPress and then use headless WordPress as the content source for a Gatsby static site

Can your Enterprise Afford to Ignore Agile Development?

Written by Bruce R. Copeland on May 12, 2015

Tags: agile, engineering, information, software development, technology, waterfall

I encounter plenty of companies (large and small) that are still trying to decide about agile software development: Does it really work? We already have software that works; why change? Agile software seems to require so much engagement between business product people and development teams. Is this really necessary? Can it work for us?

I get it—Change is Hard. So

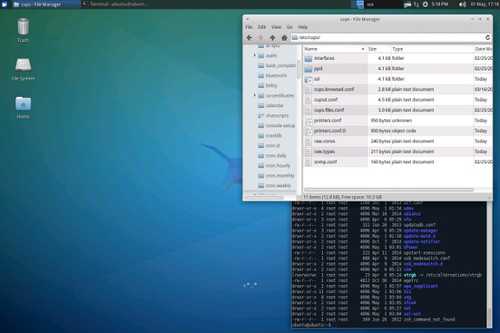

A New Raspberry Pi 2 Running Ubuntu 14.04 LTS

Written by Bruce R. Copeland on May 04, 2015

Tags: automation, backup, firewall, headless, information, internet of things, linux, raspberry pi, security, technology, ubuntu, update, usb, wireless

A few weeks ago I received a new Raspberry Pi 2, complete with case, 32 GB (class 10) SDHC card, 5V/2A power supply, and Edimax EW-7811UN USB wireless dongle. The Pi 2 is a quad-core ARMv7 system with 1 GB of RAM. This is comparable to most better smartphones and tablets, but about 20-fold slower than the 8 GB Intel i7 system I routinely use for high-end software development.

Most Raspberry Pi users find it easiest to begin with the basic Raspbian Linux distributions available from the Raspberry Pi Foundation. However I have been using diverse Unix/Linux systems heavily for more than 16 years. Currently all the computers in my company run either Ubuntu 12.04 LTS or Ubuntu 14.04 LTS. It is significantly easier to administer systems that mostly utilize the same packages and tools. Also the Ubuntu long term support (LTS) feature has been invaluable on several past occasions when we were involved in complex projects and would not have had time to upgrade other shorter-lived distributions nearing their end of life for security support. Ryan Finnie has recently provided a nice build of Ubuntu 14.04 LTS for Raspberry Pi. So it made sense to start with Ubuntu 14.04 LTS on my new Raspberry Pi

Health Care Reform: A Data Management Problem

Written by Bruce R. Copeland on September 09, 2009

Tags: actuarial, competition, costs, data management, health care, insurance, market, oversight, privacy, statistics, universal standards

Tonight President Obama will address Congress (and the nation) about health care reform.

I support health care reform. However I have reservations about the current health care reform package slated for vote in the Senate (as well as other health care reform proposals on Capitol Hill from both parties). It’s not that I believe the bald-faced lies which reactionary conservatives are leveling. Rather my lack of enthusiasm stems from the fact that none of these packages really addresses the critical underlying problems in our health care system: overly high costs and comparatively poor outcomes. I think many other Americans have the same reservations. So, what is missing?

Our health care problems are fundamentally data management problems. The first problem is that we do not have sufficiently comprehensive data on health conditions and costs. The second problem is that we have not had any MEANINGFUL actuarial oversight of health insurance companies for 25 years. These are data collection and analysis failures, and we MUST fix them if we are to fix our health care system.

We will not understand why our health care is so expensive and our outcomes so relatively poor until we begin collecting and analyzing comprehensive health data. Likewise we cannot expect meaningful competition in health insurance markets until consumers know the cost risks

Doing More with Modules in Joomla 1.5

Written by Bruce R. Copeland on February 16, 2009

Tags: blog, cms, editor, information, joomla, modules, openid, template

Modules can help solve a wide range of problems in a web site that uses Joomla, but many Joomla site designers do not really know how to fully utilize modules. Eight months ago when I began designing the CyberSym Blogs site, I had some definite ideas about what I wanted. At that time I was fairly new to Joomla, and Joomla has a rather long and steep learning curve. Nevertheless after one somewhat false start, I managed to come up with a reasonably straightforward Joomla 1.5 design for the site.

A few things have however been headaches. The default Joomla 1.5 Syndication module doesn’t allow customization