AI Realities, Myths, and Misconceptions: Computational Neural Networks

Written by Bruce R. Copeland on April 22, 2020

Tags: artificial intelligence, information, neural network, software development

This is the second of three articles about artificial intelligence (AI), how it works, and how it compares to human intelligence. As mentioned in the first article, computational neural networks make up the overwhelming majority of current AI applications. Machine Learning is another term sometimes used for computational neural networks, although technically machine learning could also apply to other types of AI that encompass learning.

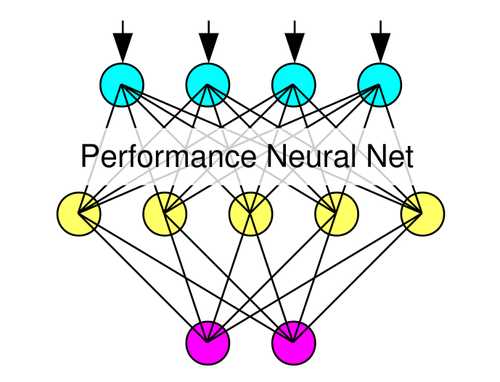

There is an extremely wide range of possible neural network types. Nodes can be connected and organized in many different arrangements. There are a variety of possible node output functions: sigmoid, peaked, binary, linear, fuzzy, etc. Information flow can be feed-forward (inputs to outputs) or bidirectional. Learning can be supervised or unsupervised. Pattern sets for supervised learning can be static or dynamic. Patterns are usually independent of each other, but schemes do exist to combine sequences of related patterns for ‘delayed gratification learning’.
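To make a few of these node output functions concrete, here is a minimal Python sketch. The function names, the default parameters, and the choice of a Gaussian for the "peaked" function are assumptions made for illustration; other definitions are equally valid.

```python
import math


def sigmoid(x: float) -> float:
    """Smooth S-shaped output between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def peaked(x: float, center: float = 0.0, width: float = 1.0) -> float:
    """Gaussian bump (an assumed form): 1 at the center, falling toward 0 away from it."""
    return math.exp(-(((x - center) / width) ** 2))


def binary(x: float, threshold: float = 0.0) -> float:
    """Hard step: 0 below the threshold, 1 at or above it."""
    return 1.0 if x >= threshold else 0.0


def linear(x: float, slope: float = 1.0) -> float:
    """Output proportional to the input."""
    return slope * x


# Compare the four functions at a few sample inputs.
for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), peaked(x), binary(x), linear(x))
```

Only the linear function is linear everywhere; the other three each have at least one region where the output changes nonlinearly with the input.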

The overwhelming majority of computational neural networks are feed-forward using supervised learning with static training patterns. Feed-forward neural networks are pattern recognition systems. Computational versions of such systems are the equivalent of mathematical functions because only a single output (or set of output values) is obtained for any particular set of inputs.
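The function-like character of a feed-forward network can be seen in a small sketch. The layout (two inputs, two hidden nodes, one output), the sigmoid node function, and all weight and bias values below are made up for illustration; they do not come from any trained system.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum of inputs plus bias, passed through sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Pass the inputs through each layer in order and return the final outputs."""
    activations = inputs
    for weights, biases in layers:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
NETWORK = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.1, -0.2]),  # hidden layer weights, biases
    ([[1.2, -0.7]], [0.05]),                   # output layer weights, biases
]

first = feed_forward([1.0, 0.0], NETWORK)
second = feed_forward([1.0, 0.0], NETWORK)
print(first, first == second)
```

With the weights fixed, nothing in the calculation depends on history or chance, so the same inputs always give the same outputs.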

In supervised learning, some external executive capability is used to present training patterns to the neural network, adjust overall learning rates, and carry out multiple regression (or some good approximation) to train the neural network. Many training schemes utilize gradient descent (pseudo-linear) approximations for multiple regression, but efficient nonlinear regression techniques such as Levenberg-Marquardt regression are also possible. To the extent that regression is complete, systems trained by regression produce outputs that are statistically optimal for the inputs and training pattern set on which they were trained.

All regression training schemes have some type of overarching learning rate. In nonlinear multiple regression, the learning rate is adjusted by the regression algorithm, whereas in gradient descent regression the learning rate is set by the executive capability. One thing that can go wrong in gradient descent approaches is the use of too high a learning rate. Under such conditions, the learning overshoots the regression optimum, often producing unstable results. In engineering terms we call such a condition a driven (or over-driven) system.
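The overshoot is easy to reproduce on a toy problem. The sketch below minimizes a single-parameter error function E(w) = (w - 3)^2 by gradient descent; the error function, the starting point, and the two learning rates are assumptions chosen only to show the effect, not a model of any real training run.

```python
def gradient_descent(learning_rate, steps=20, start=0.0, optimum=3.0):
    """Minimize E(w) = (w - optimum)**2 and return every value w takes."""
    w = start
    path = [w]
    for _ in range(steps):
        gradient = 2.0 * (w - optimum)  # dE/dw
        w -= learning_rate * gradient
        path.append(w)
    return path


careful = gradient_descent(learning_rate=0.1)
too_fast = gradient_descent(learning_rate=1.1)

print("distance from optimum, rate 0.1:", abs(careful[-1] - 3.0))
print("distance from optimum, rate 1.1:", abs(too_fast[-1] - 3.0))
```

For this error function each step multiplies the distance from the optimum by (1 - 2 × learning rate). At a rate of 0.1 the distance shrinks by a factor of 0.8 per step. At 1.1 the factor is -1.2, so each step jumps to the other side of the optimum and lands farther away than before.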

Today automation techniques and Deep Learning are widely used in constructing and training neural networks. These approaches have made neural network technology much more accessible, but most of the underlying principles (convolution, reverse transforms, advanced regression, AI to build AI) have been in use for a couple of decades.

Clearly there is much complexity in the design and training of computational neural networks. Nevertheless there are a number of best practices:

- Use a training/analysis dataset that is in total at least 10 times the size of a training set.

- Involve a good data scientist with appropriate domain knowledge when building and applying the dataset for training and testing.

- Do pre-process the input and output patterns used for training.

- Train/test early and often.

- Try a variety of different node (layer) layouts for the neural network.

- Thoroughly examine the performance of any promising architecture from the previous practice by using different random training and test subsets from the dataset.

- Carefully analyze the neural network error for different scenario subsets of the data set. This is the only good way to identify bias.

- Do a principal component analysis of the trained neural network to identify nodes (including input nodes) that may be unnecessary (and undesirable).

- Do a sensitivity analysis on different important classes of patterns.

The total training/analysis dataset must include all scenarios likely to be encountered in actual use, and those scenarios need to be present in the dataset in representative amounts. The number of patterns in any training set must also be MUCH larger than the total number of parameters (connection weights) for all nodes. In addition, the dataset should be substantially larger than the number of patterns needed for successful training. This ensures that it is possible to use one random subset of the dataset for training and another random subset for testing and then randomly repeat this division multiple times.
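The sizing rules and the repeated random division can be sketched in a few lines of Python. The layer sizes, the dataset size, the subset sizes, and the inclusion of one bias per node in the parameter count are all assumptions for illustration.

```python
import random


def count_parameters(layer_sizes):
    """Connection weights plus one bias per node (assumed) for a fully connected layout."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def random_splits(n_patterns, train_size, test_size, repeats, seed=0):
    """Yield (train, test) index lists; each repeat is a fresh random division."""
    rng = random.Random(seed)
    indices = list(range(n_patterns))
    for _ in range(repeats):
        rng.shuffle(indices)
        yield indices[:train_size], indices[train_size:train_size + test_size]


# Hypothetical sizes: 8 inputs, 5 hidden nodes, 1 output; 10,000 patterns in total.
n_parameters = count_parameters([8, 5, 1])
splits = list(random_splits(n_patterns=10_000, train_size=1_000, test_size=1_000, repeats=5))

print("parameters:", n_parameters)
for train, test in splits:
    print(len(train), len(test), "overlap:", len(set(train) & set(test)))
```

Here the training subset is one tenth of the dataset and about twenty times the parameter count, and no pattern ever appears in both the training and test subsets of the same division.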

The involvement of a good data scientist is essential, and that role requires solid domain knowledge about the target AI application area. The data scientist curates the dataset to guarantee that it is complete and representative, that it does not contain incorrect data, and that it is sufficiently large. A good data scientist also helps in the analysis of a trained neural network by ensuring that it is possible to produce subsets of the dataset which represent specific data scenarios that the neural network must handle appropriately. In this regard, the value of housing the training data in a database cannot be overemphasized. A database makes it possible to have additional fields which are not included in the training patterns but which streamline identification of classes of scenarios which the neural network must handle properly.
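As a rough illustration of why those extra fields matter, the sketch below breaks the error down by a scenario label that is stored alongside each pattern but never shown to the network. The record layout, the scenario names, and the numbers are invented for this example.

```python
from collections import defaultdict


def error_by_scenario(records):
    """Mean absolute error per scenario; records are (scenario, target, predicted) tuples."""
    totals = defaultdict(lambda: [0.0, 0])
    for scenario, target, predicted in records:
        totals[scenario][0] += abs(target - predicted)
        totals[scenario][1] += 1
    return {scenario: total / count for scenario, (total, count) in totals.items()}


# Hypothetical test results; "scenario" is a database field, not a network input.
RECORDS = [
    ("common", 1.0, 0.9),
    ("common", 0.0, 0.1),
    ("common", 1.0, 1.0),
    ("common", 0.0, 0.0),
    ("rare", 1.0, 0.4),
    ("rare", 0.0, 0.5),
]

errors = error_by_scenario(RECORDS)
overall = sum(abs(t - p) for _, t, p in RECORDS) / len(RECORDS)
print("overall:", overall)
print("by scenario:", errors)
```

The overall error looks moderate, but the breakdown shows that the rare scenario is handled far worse than the common one. That kind of gap is invisible unless the scenarios can be pulled out of the dataset separately.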

Training and neural network performance are always greatly improved when pattern inputs and outputs are pre-processed. Pre-processing can be anything from simple scaling to squashing or sometimes even development of metadata. It could be part of a data scientist’s role, although more commonly it involves multiple members of a development team. It is even possible to use another (trained) neural network to carry out the pre-processing for a second more sophisticated neural network.
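Two of the simpler forms of pre-processing, scaling and squashing, might look like the sketch below. The min-max formula, the use of tanh on standardized values as the squashing step, and the sample values are assumptions; the right choice depends on the data.

```python
import math


def min_max_scale(values, low=0.0, high=1.0):
    """Linearly rescale values so the smallest maps to low and the largest to high."""
    smallest, largest = min(values), max(values)
    if largest == smallest:
        return [low for _ in values]  # constant input carries no information
    span = largest - smallest
    return [low + (high - low) * (v - smallest) / span for v in values]


def squash(values):
    """Standardize, then apply tanh so that outliers are compressed into (-1, 1)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(variance) or 1.0  # avoid dividing by zero for constant input
    return [math.tanh((v - mean) / std) for v in values]


# Hypothetical raw input values with one large outlier.
raw = [12.0, 15.0, 14.0, 13.0, 400.0]
scaled = min_max_scale(raw)
squashed = squash(raw)
print(scaled)
print(squashed)
```

Simple scaling lets the outlier crowd the other four values into a narrow band near zero. Squashing limits how far the outlier can stretch the range, which is one reason it is sometimes preferred.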

Two other best practices, principal component analysis and sensitivity analysis, go a long way toward guaranteeing that a neural network can generalize and is not missing essential inputs. In addition, generalization is the reason why the total number of connection weights for all the neural network nodes needs to be MUCH smaller than the number of training patterns. Otherwise the neural network could simply memorize the training patterns instead of generalizing.
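Sensitivity analysis can be approximated numerically by nudging one input at a time and watching the output. The sketch below covers only the sensitivity half of the pair, not principal component analysis. The `model` function is a hypothetical stand-in for a trained network, and the step size and sample pattern are assumptions.

```python
import math


def model(inputs):
    """Stand-in for a trained network: strong on input 0, weak on input 1, ignores input 2."""
    x0, x1, _unused = inputs
    return 1.0 / (1.0 + math.exp(-(2.0 * x0 + 0.1 * x1)))


def sensitivity(f, inputs, step=1e-4):
    """Central-difference estimate of how much the output changes per unit change in each input."""
    result = []
    for i in range(len(inputs)):
        up = list(inputs)
        down = list(inputs)
        up[i] += step
        down[i] -= step
        result.append((f(up) - f(down)) / (2.0 * step))
    return result


pattern = [0.2, -0.5, 3.0]
sens = sensitivity(model, pattern)
print(sens)
```

An input whose sensitivity stays at or near zero across all important classes of patterns is a candidate for removal. The values depend on the pattern chosen, so the analysis needs to be repeated for each class of patterns that matters.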

No coverage of neural networks (computational or biological) would be complete without some discussion of linear versus nonlinear behavior. The world around us is heavily nonlinear, despite our frequent attempts to view it in simplistic, linear terms. Most neural network node output functions have one or more regions of significant nonlinearity, so neural networks certainly have the capability to handle nonlinear behavior. Training regression methods also matter. It is risky, but in some cases possible, to use linear regression to train a neural network that handles nonlinear behavior. Clearly, however, it is safer (and probably a lot faster) to use nonlinear multiple regression methods for such neural networks. Any neural network that shows switching behavior (in automation, for example) is nonlinear, but a great many other real world scenarios also involve nonlinear behavior. It is safe to say that as neural networks are applied to ever more complex problems, nonlinear behavior will be a nearly universal characteristic.

A common complaint about neural networks is that their reasoning is opaque. This complaint reflects the fact that the underlying decision (truth) tree for a neural network is not clearly spelled out the way rules are in a ruleset. This is a characteristic shared by unconscious biological neural systems, which actually perform most of the decision processing in humans and animals. It is important to recognize that much of the opacity of neural networks actually results from the complexity of the problems to which they are applied. It is also important to note that neural networks can generally be reduced to decision trees in which the decision boundaries occur at specific combinations of double precision number values. This is similar to what happens with rulesets in high performance rules engines. Finally, on a practical level, much of the reasoning in a computational neural network can be inferred through careful application of principal component analysis and sensitivity analysis techniques.

Bias is another criticism recently leveled at AI in the press. This is really a case of the news media treating a subject too simplistically or ‘getting it wrong.’ Several prominent AI projects DO currently suffer from significant bias. On the other hand, there are entire subsets of AI (such as rulesets applied to facts) where bias has no meaning. Bias originates in neural networks when there are insufficient inputs or the dataset is incomplete. Bias is therefore not a criticism of AI (or even neural networks) per se. Rather, bias results from software development projects that fail to follow best practices.

The next article in this series will examine the similarities and differences between human and artificial intelligence.