An Equivalent Distribution Simplification for Total Social Security Benefit Payments

Written by Bruce R. Copeland on May 03, 2025

Tags: annuity, data science, economics, finance, government, pension, retirement, social security, statistics

A few readers have requested some additional explanation of the equivalent distribution simplification that I used in my previous data science article about Social Security. That article relies on a present value comparison between total benefits received and total contributions made for the cohort of workers who reach full retirement age in any given year.

Tracking the benefits for a cohort presents several problems: i) payments have to be followed years into the future; ii) the Social Security Administration (SSA) does not publish enough detail to properly tease out the benefits that are unique to a given cohort; iii) accurate handling of dependent (spouse, children, parents, etc.) benefits can be even more complicated than handling worker benefits. To skirt these problems I recognized and used an equivalent distribution simplification in which total benefits paid in any year are a very good statistical representation of the total payments which would be made to that year's retirement cohort over time.
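The idea behind the simplification can be illustrated with a toy model. The sketch below is not the analysis from the article; it assumes a steady state in which every cohort has the same survival profile and the same annual benefit, and all names and numbers (`SURVIVAL`, `BENEFIT`, `BASE_SIZE`, `growth`) are hypothetical. Under those assumptions, the payments made in a single calendar year to all living cohorts add up to the same total as the payments made to one cohort over its whole retirement.

```python
import math

# All numbers below are hypothetical and chosen only for illustration.
SURVIVAL = [1.0, 0.95, 0.9, 0.8, 0.6, 0.3, 0.1]  # share of a cohort still paid k years after retirement
BENEFIT = 20_000.0   # constant annual benefit per beneficiary (no inflation)
BASE_SIZE = 1_000.0  # size of the cohort that retires in year 0


def payment(cohort_year, calendar_year, growth=0.0):
    """Benefits paid in `calendar_year` to the cohort that retired in `cohort_year`."""
    k = calendar_year - cohort_year  # years since retirement
    if k < 0 or k >= len(SURVIVAL):
        return 0.0
    # Assumption: cohort size changes by a fixed fraction `growth` per year.
    size = BASE_SIZE * (1.0 + growth) ** cohort_year
    return size * SURVIVAL[k] * BENEFIT


def cohort_lifetime_total(cohort_year=0, growth=0.0):
    """Longitudinal view: follow one cohort through all of its benefit years."""
    span = range(cohort_year, cohort_year + len(SURVIVAL))
    return sum(payment(cohort_year, t, growth) for t in span)


def single_year_total(calendar_year=0, growth=0.0):
    """Cross-sectional view: one calendar year, summed over every living cohort."""
    cohorts = range(calendar_year - len(SURVIVAL) + 1, calendar_year + 1)
    return sum(payment(c, calendar_year, growth) for c in cohorts)


print(cohort_lifetime_total(), single_year_total())
print(cohort_lifetime_total(growth=0.01), single_year_total(growth=0.01))
```

With `growth=0.0` the two totals agree. With a nonzero `growth` the cohorts are no longer identical, and the single-year total becomes an approximation rather than an exact match, which is why the simplification is statistical in nature.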

Table I here shows schematically how/why the equivalent distribution simplification works.

The leftmost column in Table I designates different years before and after the year a cohort of workers reaches full retirement age. To keep the explanation abstract I have used 0 rather than a specific year for the retirement year, and I have designated years before and after the retirement year with an appropriate integer (plus or minus).

The two columns left of the vertical…

An Analysis of Social Security Data: Not the Picture You Have Been Told!

Written by Bruce R. Copeland on April 06, 2025

Tags: annuity, data science, economics, finance, government, pension, retirement, social security

It is accurate to say that few people understand the United States Social Security system very well, and sadly this appears to include most members of Congress and even the Social Security Administration (SSA) itself. I do not expect this article will completely change the situation, but perhaps it may help to correct some of the more serious problems with Social Security funding and administration.

Over the years, the U.S. Social Security program has come under fire for various things. Some of the better-known criticisms: an entitlement, a money pit for the federal government, the reason for an ever-expanding federal budget deficit, a Ponzi scheme, etc. In recent years, a number of politicians have started claiming current Social Security beneficiaries are getting too much in benefits, and this is why the system is in trouble. One variation on this latter argument claims the current cadre of beneficiaries (boomers born between 1946 and 1964) are simply too numerous for the system.

What Is Social Security?

So what is Social Security? It is a government retirement program which most workers are entitled to use, and therefore in a very narrow technical sense it is an entitlement. On the other hand the term entitlement usually means something you get because of who you are instead of what you have done. For the retirement portion of Social Security (officially Old-Age and Survivors Insurance or OASI), beneficiary payments are all about what workers have contributed to Social Security, so the latter meaning of an entitlement clearly does NOT apply.

Functionally Social Security is closest to a pension or lifetime income annuity in which payments come from both workers and their employers. (It has some features of both defined benefit and defined contribution pension plans.) Contributions to pensions and annuities are invariably invested, and then benefits are paid at retirement from the investment gain and some of the investment principal. The main question with pensions and annuities is always 'how much in benefits are you receiving compared to what you (and your employers) paid in?' This is actually a great question to ask about Social Security.

Before looking at this question, it is important to agree on a few terms and concepts. I am going to use the term 'retirement' to mean the age at which a worker is eligible for full Social Security benefits. Right now this is age 66 for most workers, but it has been age 65 at some points in the past, and it could be age 67 in the near future. Some workers actually begin taking reduced benefits as early as age 62, and some wait until age 70 to begin taking higher benefits. I will not distinguish between those who take early, late, or regular full benefits because the total amounts early and late beneficiaries receive are built into the system to be financially equivalent to the amount an individual receives at normal full retirement. Also I note that some Social Security beneficiaries work after they begin getting Social Security benefits. This does not really complicate the definition of retirement I am using, but it will make it necessary to include any contributions these retired workers make to Social Security from their income.

The term Social Security benefits also needs some explanation. OASI makes payments to workers and in many cases their spouses and other dependents (usually children). I am going to include all these payments as worker 'benefits'. SSA also has other more minor programs covering disability, etc. These are significantly smaller in overall amounts and are administered somewhat separately from worker/spouse/dependent benefits. I am therefore going to exclude disability benefits in the analysis here.

Finally, Social Security contributions come from both employers and employees, but both amounts are based on worker income. Henceforth I will refer to the total of such contributions as worker contributions.

What Can Be Learned From Social Security Data?

SSA, like most federal government agencies, makes extensive amounts of data about its operations available. Every year the SSA publishes a Trust Report which summarizes that year's operations along with some historical context and estimates for the future. Table I (columns A-M) includes one of the tables (Table VI.A1.— Operations of the OASI Trust Fund, Calendar Years 1937-2023) from the SSA Trust Report for 2024. This table contains the historical yearly worker contributions to Social Security and yearly benefits paid out to retired workers and/or their dependents along with quite a bit of additional supporting information. Table I is a perfect starting point for a comparison of total worker contributions to Social Security and total benefits paid to workers. There is a lot of information in this table, so I have highlighted the three columns of SSA data (dark blue, purple, and green) which are of primary interest for this analysis.

Image by Alexander from Pixabay

Some aspects of the data analysis for this table are complex. Before talking in detail about the data analysis, I think it is helpful to jump ahead to the results. Figure 1 really shows it all. This is a comparison of Lifetime Worker Payroll Contributions (blue) with Lifetime Worker/Dependent Benefits (magenta) for each Year at Full Retirement (age 66) from 1948 to 2023. Social Security and the SSA were still fairly new in 1948, and the first 10 or so years of data after 1948 are somewhat sketchy. There are however some clear trends. For most of the first forty years of this plot, SSA was paying out substantially more in retired worker/dependent benefits than workers had actually contributed. Figure 1 also shows the value of the SSA trust fund as a percentage of yearly benefits (green). This curve clearly confirms what the combination of the other two curves shows. By 1980, the Social Security Trust Fund was nearly insolvent because benefits were significantly exceeding lifetime worker contributions.

Interestingly the Social Security Trust Fund did not go broke after 1980. What changed?

AI Realities, Myths, and Misconceptions: Comparison to Human Intelligence

Written by Bruce R. Copeland on March 31, 2023

Tags: artificial intelligence, information, learning, neural network, human brain

The recent release of several powerful artificial intelligence technologies has unleashed all kinds of responses among humans: shock at the sophistication of what these technologies can do, shock at their occasional stupidity, and in some quarters terror at the potential implications for human civilization. Before we can really understand this kind of artificial intelligence, we need to understand human intelligence.

This article is the third in a series of three articles about artificial intelligence (AI), how it works, and how it compares to human intelligence. The first article surveyed the different available types of AI, whereas the second article examined computational neural networks in some detail.

What Does the Human Brain Do (and how does it do it)?

As humans, we like to believe our intelligence is conscious and rational. The reality is FAR different. Everything in animal decision making is based ultimately on some form of neuronal network. A single neuron or set of neurons converts sensory inputs into some kind of output decision or action. The overwhelming amount of this processing is done unconsciously. Human unconscious learning is predominantly emotional. The rate and extent at which we learn something are dictated by neurotransmitters (glutamate, GABA, dopamine, etc.) whose levels/activity are heavily influenced by emotion. Thus we learn some things very rapidly and others much more slowly.

Humans and likely many other animals also have a certain degree of self-awareness and consciousness. This is an important alternate system for decision making that operates substantially parallel to our unconscious — but only when we make use of it.

Using our conscious awareness we can carry out templated procedures (step A, then step B, then...). Some of us have the capability to perform complex logical analysis and higher mathematical reasoning, apparently as advanced templated procedures. But very often those of us with these capabilities find ourselves

AI Realities, Myths, and Misconceptions: Computational Neural Networks

Written by Bruce R. Copeland on April 22, 2020

Tags: artificial intelligence, information, neural network, software development

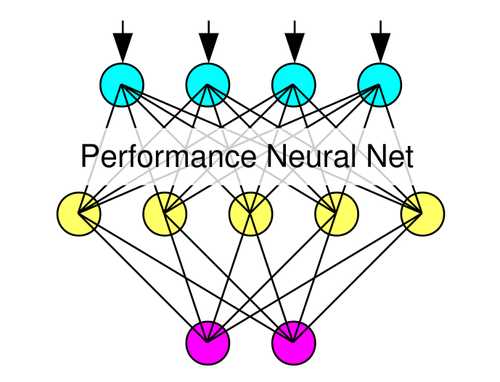

This is the second of three articles about artificial intelligence (AI), how it works, and how it compares to human intelligence. As mentioned in the first article, computational neural networks make up the overwhelming majority of current AI applications. Machine Learning is another term sometimes used for computational neural networks, although technically machine learning could also apply to other types of AI that encompass learning.

There is an extremely wide range of possible types of neural networks. Nodes can be connected/organized in many different arrangements. There are a variety of different possible node output functions: sigmoid, peaked, binary, linear, fuzzy, etc. Information flow can be feed-forward (inputs to outputs) or bidirectional. Learning can be supervised or unsupervised. Pattern sets for supervised learning can be static or dynamic. Patterns are usually independent of each other, but schemes do exist to combine sequences of related patterns for ‘delayed gratification learning’.

The overwhelming majority of computational neural networks are feed-forward using supervised learning with static training patterns. Feed-forward neural networks are pattern recognition systems. Computational versions of such systems are the equivalent of mathematical functions because only a single output (or set of output values) is obtained for any particular set of inputs.

In supervised learning, some external executive capability is used to present training patterns to the neural network, adjust overall learning rates, and carry out multiple regression (or some good approximation) to train the neural network. Many training schemes utilize gradient descent (pseudo-linear) approximations for multiple regression, but efficient nonlinear regression techniques such as Levenberg-Marquardt regression are also possible. To the extent that regression is complete, systems trained by regression produce outputs that are statistically optimal for the inputs and training pattern set on which they were trained.

All regression training schemes have some type of overarching learning rate. In nonlinear multiple regression, the learning rate is adjusted by the regression algorithm, whereas in gradient descent regression the learning rate is set by the executive capability. One of the things that can go wrong in gradient descent approaches is to use too high a learning rate. Under such conditions, the learning overshoots the regression optimum, often producing unstable results. In engineering terms we call such a condition a driven (or over-driven) system.
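The overshoot described above can be seen in a deliberately tiny setting. The sketch below is an illustration only, not a neural network: it assumes a single weight `w` and the objective f(w) = w², whose gradient is 2w, and the function name and learning rates are chosen here for demonstration. Each update multiplies `w` by (1 - 2 × learning rate), so the behavior depends entirely on that factor.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize f(w) = w**2 (gradient 2*w) using a fixed learning rate."""
    w = start
    history = [w]
    for _ in range(steps):
        w = w - learning_rate * 2.0 * w  # standard gradient descent update
        history.append(w)
    return history


# Factor 0.8 per step: smooth approach to the optimum at w = 0.
stable = gradient_descent(0.1)
# Factor -0.8 per step: every step overshoots and flips sign, but still shrinks.
oscillating = gradient_descent(0.9)
# Factor -1.2 per step: every step overshoots by more than it corrects.
unstable = gradient_descent(1.1)

for name, run in [("stable", stable), ("oscillating", oscillating), ("unstable", unstable)]:
    print(name, abs(run[-1]))
```

In the last run the magnitude of `w` grows at every step, which is the unstable, over-driven behavior in miniature.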

Today automation techniques and Deep Learning are widely used in constructing and training neural networks. These approaches have made neural network technology much more accessible, but most of

Who Is Testing the Test?

Written by Bruce R. Copeland on April 18, 2020

Tags: covid-19, engineering, technology, testing

In these COVID-19 times, there is much talk about ‘testing’. As we get more and more pressure to reopen our economy, it is clear we have nowhere near the testing capacity or testing certainty that we really need in order to make rational decisions about who can work and where. How did we get to this point?

It may come as a surprise to hear that testing (whether software, biomedical, manufacturing…) is a complex subject involving both science and art. For starters there are many different kinds of tests even in a single domain of endeavor. (With COVID-19 for instance, there are tests for current COVID-19 infection, past exposure to COVID-19, relatedness of different COVID-19 samples, etc.) Each of these requires different (sometimes radically different) technology.

So how is a test designed? And how do we know whether, when, and why a test works (or not)? Designing a test is basically the same methodology CTOs and Chief Architects use every day for any process:

- Identify the relevant technologies

- Set forth a plan for how to connect the technologies

- Check/verify that each technology works in the intended setting (POC)

- String together the different technologies

- Verify that the entire process/test works (POC)

- Verify that you have the supply chain or needed throughput for all the steps (have legal begin drafting the necessary contracts with appropriate exigencies/penalties)

- Do a complete error analysis for the full process/test

- Develop more specific ways to identify/troubleshoot potential or actual reliability problems (test the process/test)

Sometimes you are lucky and the process/test you want is

AI Realities, Myths, and Misconceptions: Overview

Written by Bruce R. Copeland on January 21, 2020

Tags: artificial intelligence, information, software development

In the last few years Artificial Intelligence (AI) has attracted much attention and some fair bit of controversy. Unfortunately there are significant myths and misconceptions about AI. This is the first of three articles about AI: what it is, how it works, and how it compares to human intelligence.

There are actually five different basic types of artificial intelligence:

- Rule Systems are sets of logical rules that apply to specific domains of knowledge. Such systems have been widely used (in various degrees of sophistication) in software for decades. Rule systems correspond closely with human conscious logical reasoning and mathematical problem solving. The logic in rule systems is entirely deterministic, and the effectiveness of a rule system depends sensitively on the ability to identify actual facts and correctly discriminate facts from assumptions. Rule systems are occasionally used with lesser rigor in conjunction with some type(s) of heuristics. Expert systems are one example, although they are no longer widely used in computing.

- Neural Networks are systems of connected processing nodes (including input and output nodes) that mimic biological neural systems. The connections between nodes can be organized in many ways, but nodes are typically divided into layers, and most neural networks have feed-forward (input to output) information flow. A specific combination of input data and expected output data is called a pattern. Each node (or more commonly layer) has an associated learning rate, and neural network learning can be either supervised or unsupervised. There are actually many different types of neural networks, but only one main type (feed-forward, supervised deep learning) is in wide use today. Supervised training of a neural network is accomplished by presenting multiple patterns to the network, and the resulting training is usually some approximation of mathematical regression (linear or nonlinear). Stochastic variation can be introduced as part of training, but is not a requirement. Neural networks are similar to human neural (non-conscious) data/input processing; however human neural processing is NOT regression.

-

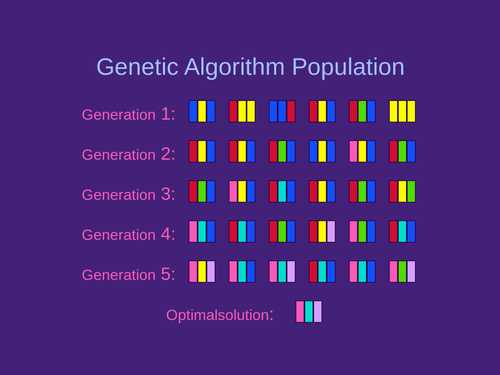

Genetic Algorithms are systems that optimize sets of possible attribute values for a system or problem by genetic reproduction/selection. A population of different possible combinations of attribute values is used. Optimization is accomplished by forming subsequent generations from the initial population according to whatever reproduction and selection models have been chosen. Optimal attribute values are usually obtained after numerous generations. The optimization is highly stochastic.

- Genetic Programming is analogous to a genetic algorithm, but the attributes/values are programming loops, decisions, order, etc. used for a computer algorithm. The resulting optimization is highly stochastic. Genetic programming can be very powerful but is not widely used. It is unclear whether biological organisms might use something similar to genetic programming when optimizing the logical work flow for some kind of conscious/unconscious intelligence.

- Fuzzy Logic is really just a sophisticated form of heuristics. The architecture for fuzzy logic is typically that of a neural network, although fuzzy systems sometimes make use of specialized rules engines when propagating inputs through the fuzzy network.
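As a concrete illustration of the genetic algorithm entry in the list above, here is a minimal sketch. It assumes one particular set of choices among many possible ones: attribute values are bits, fitness is simply the number of 1 bits, selection keeps the fitter half of the population, and reproduction uses single-point crossover with occasional mutation. The function names and parameter values are hypothetical.

```python
import random


def fitness(individual):
    """Toy fitness: count of 1 bits (higher is better)."""
    return sum(individual)


def evolve(pop_size=20, genes=16, generations=40, mutation_rate=0.05, seed=0):
    """Return the best fitness found in each generation, starting with the initial one."""
    rng = random.Random(seed)  # seeded so the stochastic run is repeatable
    population = [[rng.randint(0, 1) for _ in range(genes)] for _ in range(pop_size)]
    best_per_generation = [max(map(fitness, population))]
    for _ in range(generations):
        # Selection: the fitter half survives unchanged and serves as parents.
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, genes)  # single-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = parents + children
        best_per_generation.append(max(map(fitness, population)))
    return best_per_generation


history = evolve()
print(history[0], history[-1])
```

Because the surviving parents are carried over unchanged, the best fitness in this sketch never decreases from one generation to the next, even though each individual run depends on the random seed.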

Of these basic types, only rule systems and neural networks are in common use. Today when people refer to AI, they almost invariably mean

Health Care Reform: A Data Management Problem

Written by Bruce R. Copeland on September 09, 2009

Tags: actuarial, competition, costs, data management, health care, insurance, market, oversight, privacy, statistics, universal standards

Tonight President Obama will address Congress (and the nation) about health care reform.

I support health care reform. However I have reservations about the current health care reform package slated for vote in the Senate (as well as other health care reform proposals on Capitol Hill from both parties). It’s not that I believe the bald-faced lies which reactionary conservatives are leveling. Rather my lack of enthusiasm stems from the fact that none of these packages really addresses the critical underlying problems in our health care system: overly high costs and comparatively poor outcomes. I think many other Americans have the same reservations. So, what is missing?

Our health care problems are fundamentally data management problems. The first problem is that we do not have sufficiently comprehensive data on health conditions and costs. The second problem is that we have not had any MEANINGFUL actuarial oversight of health insurance companies for 25 years. These are data collection and analysis failures, and we MUST fix them if we are to fix our health care system.

We will not understand why our health care is so expensive and our outcomes so relatively poor until we begin collecting and analyzing comprehensive health data. Likewise we cannot expect meaningful competition in health insurance markets until consumers know the cost risks

High-Fructose Corn Syrup: Villain or Scapegoat?

Written by Bruce R. Copeland on August 27, 2009

Tags: autism, blood sugar, cortisol, fructose, glucagon, glucose, glycogen, high fructose corn syrup, honey, insulin, misunderstanding, sucrose

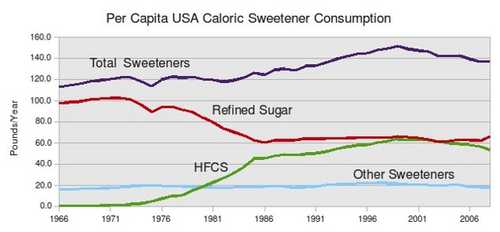

Recently a friend posed the question: “Could a change in carbohydrate source affect protein formation?” The context for this question was a discussion about whether the shift to corn carbohydrate (principally high-fructose corn syrup) over the last 30 years could have somehow produced the apparent rise in autism. [This latter question might just as easily involve any of several other health conditions besides autism that have also shown a steep rise over the past 30 years.] In principle, the answer to both questions is YES, but not in the way you might expect. Both of these questions really center around the effects of high-fructose corn syrup (HFCS) in our diet. Many people have recently become quite concerned about possible adverse effects from fructose consumption. The effects of fructose and glucose consumption are complicated and not completely understood. In the end, however, HFCS is probably of more concern because of its contribution to our overall increased consumption of simple sugars than because of any specific fructose effect.

Carbohydrate is an essential part of the human diet. Carbohydrates range from simple sugars (glucose, fructose, galactose) to complex chains of those sugars. The more complex the carbohydrate, the slower its rate of digestion. In fact, some complex carbohydrates are effectively indigestible (fiber). Because carbohydrate is so important

Mortgage Crisis, Recession, and Bailout: Reconciling the Numbers

Written by Bruce R. Copeland on January 22, 2009

Tags: banking, credit, economics, foreclosure, investment, mortgage, payroll, recession, treasury bailout

Four months into a recession spawned by the mortgage investment banking crisis, it is worth doing some basic math on the real costs.

First some background: I supported the original treasury bailout, albeit with two important qualifications. First, I wanted the proposed $750 billion bailout to be authorized by Congress in three chunks of $250 billion instead of two chunks of $375 billion. Second, I noted that the projected actual losses from home foreclosure (at that time ~$200 billion) were a fraction of the total $750 billion requested by Dept. of Treasury for bailout. I suggested it would be safest in the long run to use the bulk of the bailout to buy up troubled mortgages or their underlying properties.

With an intensifying recession, I regret not pushing harder on these two points, or at least I regret that my congressional representatives and their colleagues didn’t listen better. The incoming Obama administration has proposed that as much as $800 billion of stimulus may be necessary to deal with the recession (on top of the $400 billion of TARP that the Bush administration has spent). Other estimates of recessionary losses are as much as $1400 billion (10% of GDP)

Estimating Nationwide Recessionary Losses from Mortgage Foreclosure

Written by Bruce R. Copeland on January 21, 2009

Tags: consumer spending, economics, foreclosure, home equity, housing bubble, job mobility, mortgage, real estate, recession

At the height of the housing bubble, the nationwide value of residential real estate was commonly estimated to be $20 – $25 trillion. The current national foreclosure rate is about 2% (and climbing). The average DECREASE in property value for foreclosed residential real estate is less than 50%. This yields (0.5)*(0.02)*($25 trillion) = $250 billion as a current estimate of the total loss associated with failed mortgages—probably an overestimate!
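The estimate above is a product of three factors, which makes it easy to rerun with different inputs. The sketch below only restates that arithmetic; the function name is hypothetical, and the second call is an added illustration that applies the same rates to the low end of the quoted $20 to $25 trillion range.

```python
def foreclosure_loss(total_value, foreclosure_rate, value_decrease):
    """Estimated loss = total real estate value x share foreclosed x share of value lost."""
    return total_value * foreclosure_rate * value_decrease


TRILLION = 1e12
BILLION = 1e9

# Figures from the paragraph above: $25 trillion, 2% foreclosure rate, 50% decrease.
high_estimate = foreclosure_loss(25 * TRILLION, 0.02, 0.5)
# Same rates applied to the low end of the range ($20 trillion).
low_estimate = foreclosure_loss(20 * TRILLION, 0.02, 0.5)

print(f"high: ${high_estimate / BILLION:.0f} billion")
print(f"low:  ${low_estimate / BILLION:.0f} billion")
```

Because 50% is stated as an upper bound on the average decrease in value, both figures are upper-end estimates under these inputs.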

This number is lower than other loss estimates originally reported for the mortgage meltdown. Early estimates often reported the dollar value of troubled mortgages. This is highly misleading for three reasons: First, not all troubled mortgages end up in foreclosure. Second, the value of a mortgage includes the total principal, whereas the average decrease in home value (the possible loss) is less than 50% of the principal. Third, these estimates include the mortgage interest over the life of the loan, which is more than double the original principal value. Mortgages get paid off all the time when homeowners sell, and no mortgage holder ever gets to treat 10 or 20 years of missed interest opportunity (over the original life of the mortgage) as a “loss”

The Economic Reality of the Laws of Thermodynamics

Written by Bruce R. Copeland on October 15, 2008

Tags: 1st law, 2nd law, 3rd law, economics, engineering, entropy, financial derivative, humpty dumpty, investment scheme, laws of thermodynamics, market, perpetual motion, risk, science, thermodynamics

In previous articles, I’ve written about the importance of the fundamental laws of nature. Paramount among these are the Laws of Thermodynamics. The Laws of Thermodynamics apply to everything, including economic activity!

Early statements of the Laws of Thermodynamics were framed in terms of heat and heat engines. Even today most engineering and science students are introduced to thermodynamics through heat principles. The 1st Law of Thermodynamics (Conservation of Energy) states that total energy (work done by/on a system plus heat transferred to/from a system) is conserved in a closed system. The 2nd Law says that heat is converted incompletely into work (dissipated) or that efficiency is never complete. The 3rd Law says that absolute zero temperature is unattainable.

More popular modern statements of the 2nd Law state that entropy (a measure of disorder) is constantly increasing for any closed system. While the 2nd Law is sometimes difficult for humans to accept, it is interesting that there are well known colloquialisms which incorporate the underlying meaning of the 2nd Law. Good examples are Murphy’s Law (“Anything that can go wrong will go wrong”) and the nursery rhyme, “Humpty Dumpty sat on a wall. Humpty Dumpty had a great fall. All the King’s horses and all the King’s men couldn’t put Humpty together again.”

Reprinted and modified with permission from Scott J. Wakefield (http://www.scottjwakefield.com)

Although most formal applications of the Laws of Thermodynamics are found in the sciences and engineering

Economic Crisis—We Have an Ego Problem Here

Written by Bruce R. Copeland on September 30, 2008

Tags: economics, ego, fraud, main street, mortgage, real estate, sound bite, wall street

There is more than enough blame to go around for the current U.S. economic crisis, and we won’t solve the crisis until we face our ego problem.

We have an ego problem in a President of the United States who can’t/won’t go to the American People with a giant Mea Culpa, acknowledging that his administration took its eye off the ball for a long time.

We have an ego problem in a Treasury Secretary who can spend hours and days trying to broker a deal with Congress, but can’t take the time to explain directly to the American People EXACTLY and THOROUGHLY what the problem is with our financial markets.

We have an ego problem in corporate leaders on Wall Street who would rather allow their companies to go bust

Critical Thinking: Sooner or Later, Your Assumptions Will Kill You

Written by Bruce R. Copeland on September 04, 2008

Tags: assumption, critical thinking, extrapolation, hypothesis, law of nature, science, scientific reasoning, theory, uncertainty principle

This is literally true.

An assumption is something we think is true, but which we have not thoroughly tested or proven. Often it is based on an extrapolation or a parallel. Careful handling of assumptions is essential to critical thinking.

Everyone makes assumptions. It’s pretty much the only way to get anything done, given that it is impossible to have complete knowledge about everything (a law of nature called the Uncertainty Principle)

It’s the Law!

Written by Bruce R. Copeland on September 01, 2008

Tags: 1st law, 2nd law, 3rd law, conservation of energy, conservation of momentum, gravity, law, law of natural selection, law of nature, mechanism, misunderstanding, science, stability, uncertainty principle

Many of you have heard the humorous expression or seen the bumper sticker “Gravity: It’s not just a good idea. It’s the Law!” No one really seems to doubt the Law of Gravity, though more than a few of us occasionally fail to take full account of it. But obscured by the politicization of science and the rancorous public debates over evolution (The Law of Natural Selection, etc.) and climate change is the fact that science recognizes and codifies a number of important laws of nature. Like the Law of Gravity, most of these laws are not even remotely in question. Yet whether from indifference or lack of science education, many people seem to be either unaware of the importance of these laws or else have come to believe that they somehow don’t apply. Nothing could be further from the truth